Introduction: The Enterprise Shift from AI Pilots to Outcomes

AI has moved decisively from experimentation to expectation.

Artificial intelligence is now a top priority for organizations across industries. Leadership teams are being asked to invest in AI and show results, yet many organizations struggle to move beyond pilots. AI initiatives often start with enthusiasm (new tools, promising use cases, early proofs of concept) but quickly become fragmented, making it difficult to explain what value AI is actually delivering.

The journey from initial AI interest to measurable outcomes is rarely straightforward. Questions emerge early: where to start, how to manage risk, and how to justify continued investment.

In our work with enterprise customers, we see this pattern consistently. Most AI programs slow down when a few fundamental questions remain unanswered:

- Is the data truly ready to support AI at scale?

- Are security, compliance, and responsible AI considerations built in early enough to allow production use?

- Which AI initiatives are worth investing in, given the effort, cost, and expected return?

These questions are often addressed separately—by different teams, at different times. The result is disconnected decisions and stalled progress. In practice, AI only scales when readiness, risk, and ROI are considered together.

That’s where the AI Readiness, Risk & ROI Assessment Framework from Relevance Lab comes in. It reflects what we have learned from delivering AI and data platforms in real enterprise environments. The framework helps enterprises move beyond fragmented experimentation by focusing on three connected pillars: readiness, risk, and ROI.

Why Most AI Programs Stall

AI initiatives rarely fail because the underlying technology does not work. In most enterprise environments, the tools perform as expected. Models generate insights, copilots respond intelligently, and automation demonstrates potential value in isolation.

Programs stall for a different reason: a lack of clarity across three foundational questions.

First, are we ready?

Readiness goes beyond tooling. It includes data quality and accessibility, platform and integration capabilities, operating processes, and organizational skills. Without these foundations, AI remains difficult to operationalize and nearly impossible to scale.

Second, are we managing risk responsibly as adoption expands?

As AI moves from experimentation into production, concerns around security, compliance, explainability, and responsible use can no longer be deferred. When governance is added late, it often slows or halts deployment altogether.

Third, where will AI deliver ROI, and over what time horizon?

Without a clear link to business value, AI initiatives struggle to compete for funding and executive attention. Promising pilots fail to progress because the expected return is unclear or unmeasured.

Most assessments and maturity models address only one of these questions. Some focus exclusively on technical readiness. Others emphasize governance or compliance. Still others prioritize use case identification without evaluating whether the underlying foundations are in place.

Real progress requires addressing readiness, risk, and ROI together, as one coordinated effort rather than as separate workstreams.

Bringing Readiness, Risk, and ROI Together

To make AI work at scale, your organization must keep three critical goals in balance:

- Readiness: Making sure your data, systems, talent, and processes can support AI.

- Risk: Ensuring your adoption of AI remains safe, secure, and compliant.

- ROI: Choosing AI projects that are measurable, purposeful, and tied to business goals.

These pillars are tightly connected. Strong technology without governance can create serious risk exposure. Strong governance without a clear ROI strategy often leads to stalled initiatives and wasted effort. And without foundational readiness, even well-funded and well-intentioned AI programs fail to scale.

Successful enterprise AI adoption is not about optimizing one pillar in isolation. It requires aligning readiness, risk, and ROI from the outset and managing them together as AI moves from experimentation into everyday operations.

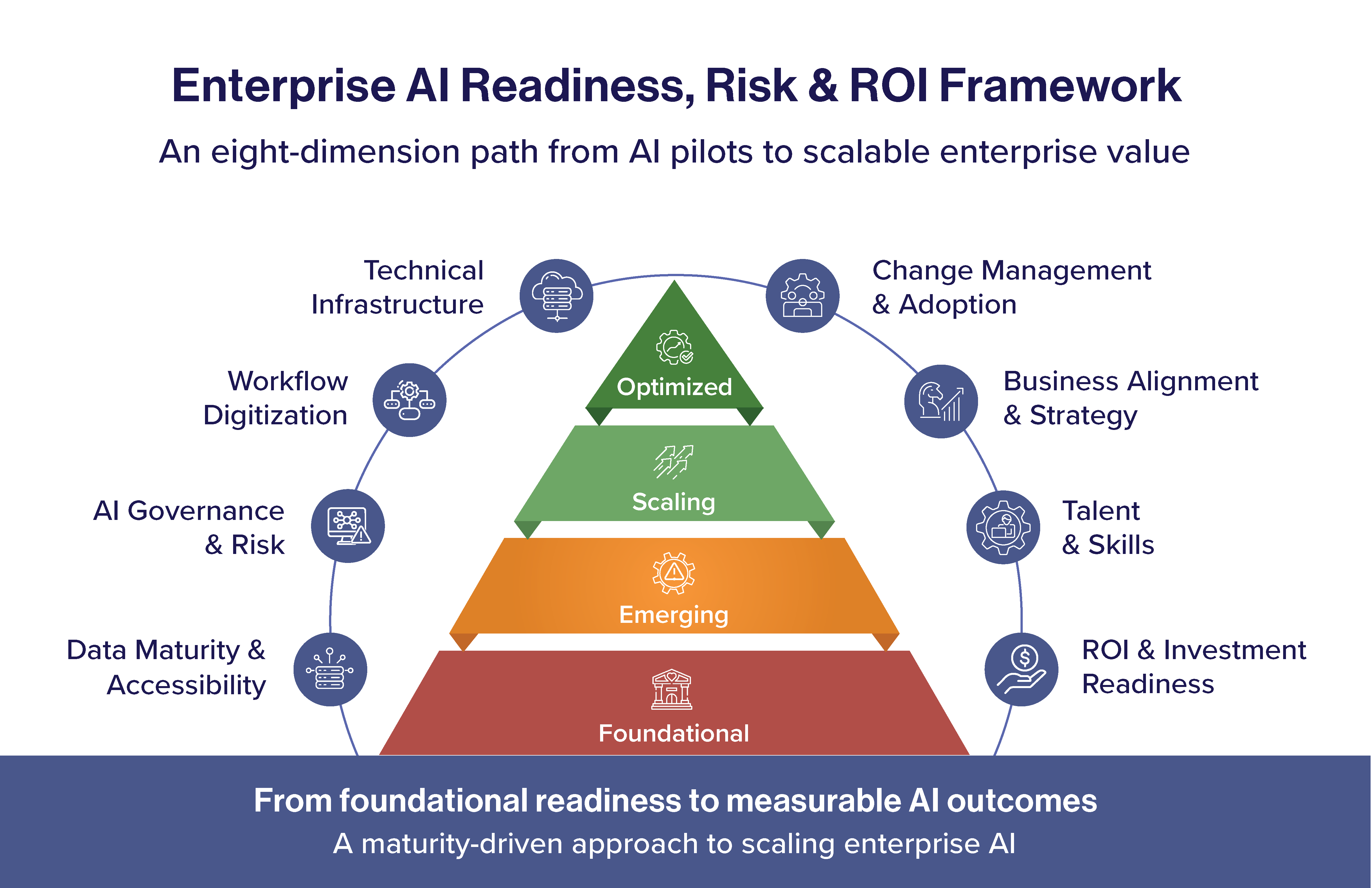

The Eight Dimensions That Define Enterprise AI Readiness

Instead of evaluating isolated tools, platforms, or individual use cases, the framework assesses AI readiness across eight enterprise dimensions. Together, these dimensions determine whether an organization can adopt AI in a way that is scalable, responsible, and tied to business outcomes.

Data Maturity & Accessibility

This dimension evaluates whether data is trusted, governed, standardized, and reusable for AI. It examines data quality, accessibility, lineage, and the ability to reliably support analytics and machine learning workloads.

AI Governance & Risk

This dimension assesses whether responsible AI principles, security controls, and compliance requirements are embedded into AI adoption. It considers policies, oversight mechanisms, risk management practices, and alignment with regulatory expectations.

Workflow Digitization

AI delivers value when it augments real business processes. This dimension evaluates whether workflows are sufficiently digitized, standardized, and measurable to support AI-driven augmentation and automation.

Technical Infrastructure

This dimension examines whether current platforms, cloud environments, and data architectures can support AI workloads at scale. It considers scalability, integration, performance, and operational resilience.

Talent & Skills

AI adoption depends on people as much as technology. This dimension evaluates AI literacy, technical capability, and change readiness across business, IT, and leadership teams.

Business Alignment & Strategy

This dimension assesses whether AI initiatives are tied directly to business priorities and outcomes. It evaluates clarity of objectives, executive sponsorship, and alignment between AI efforts and enterprise strategy.

Change Management & Adoption

Even well-designed AI solutions fail if they are not used. This dimension evaluates organizational readiness for change, user adoption strategies, and the likelihood that AI-powered workflows will be embraced in practice.

ROI & Investment Readiness

This dimension examines whether AI investments are managed as a portfolio of value rather than as isolated projects. It evaluates funding models, value measurement practices, prioritization mechanisms, and the ability to track returns over time.

Each dimension is assessed across four maturity levels: Foundational → Emerging → Scaling → Optimized.

Taken together, these assessments provide a balanced and realistic view of enterprise AI readiness—highlighting not just where an organization stands, but what it will take to move forward.

Why Context Matters as Much as Scores

Two organizations can reach the same maturity level and still operate in fundamentally different realities. A numerical score alone rarely captures the constraints, dependencies, and trade-offs that shape what is actually possible in an enterprise environment.

For this reason, the framework is intentionally designed to capture context alongside quantitative scores.

During the assessment, organizations provide input such as:

- Industry and functional context

- Structured inputs related to standardization and measurement

- Real-world evidence of tools, constraints, and operating conditions

- Follow-up clarification on blockers, dependencies, and priorities

This contextual input is not optional. It is essential.

It allows the assessment to distinguish between:

- Aspirational maturity and operational reality

- Tactical gaps and structural constraints

- Short-term opportunities and longer-term investments

In practice, this approach reflects how experienced consultants assess enterprise readiness—by balancing what is documented with what is actually operating—while remaining scalable and repeatable across organizations.

From Inputs to Insight: How the Assessment Is Used

The assessment begins with structured inputs to establish a baseline maturity view. Contextual inputs are then used to validate, refine, and stress-test those results against real operating conditions.

This combined perspective enables:

- More accurate and reliable maturity scoring

- Industry-aware benchmarking

- Early identification of AI risk exposure

- Feasible, ROI-driven use case recommendations

- A realistic, staged roadmap for AI adoption

The outcome is not insight alone. It is clear prioritization and actionable direction—grounded in what an organization can realistically execute, not just what it aspires to achieve.

What Organizations Get Out of the Assessment

Organizations that complete the assessment gain a clear, enterprise-level view of where they stand and what to do next.

They receive:

- An overall AI Readiness score

- Clear strengths and gaps across all eight dimensions

- A prioritized set of AI use cases aligned to ROI and feasibility

- A consolidated view of AI risk and governance considerations

- A practical 30–60–90-day roadmap that aligns ambition with execution

This shifts AI planning from opinion-based discussion to evidence-based decision-making—giving leadership teams the confidence to invest, scale, and govern AI initiatives deliberately.

AI Readiness vs. GenAI Maturity

The AI Readiness, Risk & ROI Assessment is typically the starting point for enterprise AI adoption. It helps organizations establish whether the foundational conditions required to scale AI responsibly and deliver value are in place.

For organizations that are already deploying copilots, retrieval-augmented generation (RAG) systems, or GenAI platforms, Relevance Lab also offers a GenAI Maturity Assessment. This assessment focuses more narrowly on the realities of scaling generative AI in production environments, including:

- Enterprise-wide GenAI adoption patterns

- RAG and knowledge readiness

- Responsible GenAI controls

- Scaling copilots across teams and functions

Together, these assessments support the full AI adoption lifecycle—from establishing readiness to managing maturity at scale.

Moving from AI Intent to AI Impact

AI success today is no longer about experimentation. It is about intentional execution.

The AI Readiness, Risk & ROI Assessment Framework helps organizations:

- Reduce uncertainty before making significant investments

- Address risk early, before scaling creates exposure

- Focus on ROI from the outset, not as an afterthought

At Relevance Lab, we believe AI adoption should be practical, responsible, and measurable. This framework is designed to make that belief operational—helping organizations move from AI intent to sustained business impact.